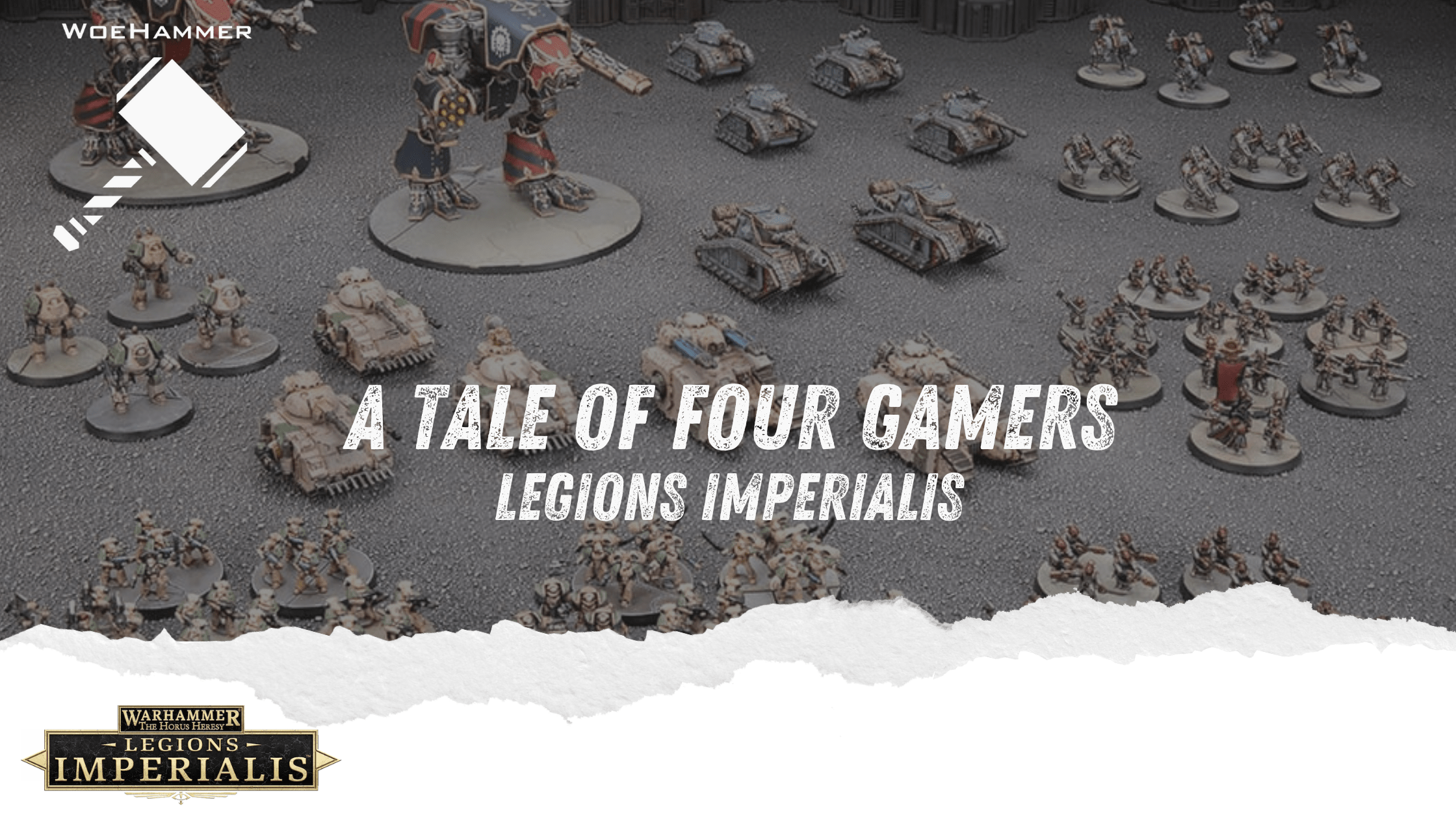

My Dive into the ‘Tale of Four Gamers’ PDF: Did It Fix My Screw-Up?

Man, sometimes you just stumble onto something that looks important, right? This whole ‘Tale of Four Gamers’ PDF started popping up on a few feeds, folks whispering about it being the secret sauce for understanding player churn and scaling backend architecture for game systems. I saw the title, downloaded the thing instantly, and figured I needed to dig in. Why? Because I had just absolutely flubbed a deployment, and I was desperate.

I’m going to tell you the story of what I did with this document—the whole messy process of tearing it apart—and then tell you if it actually helped me climb out of the hole I dug for myself.

The Mess I Was In: Why I Needed This PDF

Let me backtrack a bit. Last fall, we launched this new feature—a big, competitive PvP ladder—and my team was responsible for the matchmaking service and the database scaling behind it. I signed off on the scaling strategy. Big mistake. On launch day, the first time concurrent users spiked past the 50k mark, the whole system just coughed and died. Matchmaking froze. Players started seeing error screens. The system didn’t just slow down; it flat-out stopped processing requests.

I remember sitting there, staring at the metrics dashboard that was pure red, feeling my stomach drop through the floor. The CTO dragged me into a conference room and didn’t yell—that would have been better—he just looked disappointed. He told me to fix it, find the root cause, and make sure it never happened again. If I couldn’t, well, I knew what that meant.

So, for the next three weeks, I was basically nocturnal. I pored over logs, I rewrote config files, and I frantically searched online for anyone who had solved this specific “concurrent player choke” issue without just throwing infinite hardware at it. That’s when I tripped over this PDF, the ‘Tale of Four Gamers.’ It promised to categorize player behavior (The Achiever, The Socializer, The Explorer, The Killer) and, crucially, map those behaviors to database load profiles. Sounded exactly like the smart answer I needed.

My Practice: Dissecting the Document and Testing Its Claims

The PDF is massive, like 80 pages of dense, academic-sounding stuff, with diagrams that look like circuit boards. I didn’t just read it; I treated it like a mandatory college course. I printed the whole thing out and started highlighting and scribbling notes in the margins.

Here’s how I structured my review:

- I mapped out the key hypotheses: The PDF claimed that ‘Achievers’ caused predictable spikes in write operations because they focused on competitive scores, while ‘Explorers’ caused scattered, unpredictable read operations accessing obscure parts of the world state.

- I isolated our actual player data: I went back to the logs from the failure event and tried to categorize the failed requests by the player types the PDF described. This took days of manually classifying player actions.

- I threw together a minimal system mock-up: I used a test environment, not the live one, obviously. I coded four simple bots, each designed to simulate the behavior of one of the “four gamers” as described in the PDF. One bot hammered the score tables, one just wandered and pulled random inventory data, and so on.

- I ran load tests using the bot profiles: Instead of standard stress tests, I used the load patterns the PDF said I should expect. I wanted to see if the system failed where the paper predicted it would.
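If you want to try the same exercise, here’s a minimal sketch of what archetype bots like these might look like in Python. Treat it as an illustration under stated assumptions, not the harness from this story. The write ratios, key-space sizes, and the names `Profile`, `PROFILES`, and `run_bot` are all hypothetical. They were picked only to mirror the PDF’s claims: Achievers concentrate writes on a few score rows, and Explorers scatter reads across a big world state.

```python
import random
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    """Hypothetical load profile for one player archetype."""
    write_ratio: float  # fraction of operations that are writes
    key_space: int      # how many distinct keys this bot can touch


# Illustrative numbers only; they are NOT taken from the PDF.
# Achievers hammer a few score rows with writes; Explorers read widely.
PROFILES = {
    "achiever": Profile(write_ratio=0.8, key_space=10),
    "socializer": Profile(write_ratio=0.4, key_space=1_000),
    "explorer": Profile(write_ratio=0.05, key_space=100_000),
    "killer": Profile(write_ratio=0.6, key_space=100),
}


def run_bot(name: str, ops: int, seed: int = 0) -> Counter:
    """Simulate `ops` operations for one archetype; tally reads, writes, and distinct keys."""
    profile = PROFILES[name]
    rng = random.Random(seed)  # seeded so runs are repeatable
    tally: Counter = Counter()
    touched = set()
    for _ in range(ops):
        kind = "write" if rng.random() < profile.write_ratio else "read"
        tally[kind] += 1
        touched.add(rng.randrange(profile.key_space))
    tally["distinct_keys"] = len(touched)
    return tally


if __name__ == "__main__":
    for name in PROFILES:
        print(name, dict(run_bot(name, ops=10_000)))
```

Even the raw tallies show the contrast the PDF describes. The Achiever profile piles thousands of writes onto at most ten keys, which is where row and lock contention comes from. The Explorer profile spreads mostly reads over thousands of distinct keys, which is where cache locality breaks down.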

What I uncovered immediately was confusion. The PDF painted these behaviors as neat boxes. But my real-world data? It was a soup. Our players were messy. They were ‘Achievers’ on Monday, ‘Socializers’ on Tuesday, and sometimes they were doing all four things at once. The models in the PDF felt too clean, too theoretical, like they were written by someone who had never seen a thousand angry teenagers trying to log in simultaneously.
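One way to make the models survive contact with that soup is to stop labeling players at all and treat each session as a weighted blend of the four profiles. Here’s a minimal sketch. It assumes per-archetype write ratios that are made up for illustration, and `WRITE_RATIO` and `blended_write_ratio` are hypothetical names.

```python
from typing import Mapping

# Hypothetical write ratios per archetype (illustrative, not from the PDF).
WRITE_RATIO = {"achiever": 0.8, "socializer": 0.4, "explorer": 0.05, "killer": 0.6}


def blended_write_ratio(weights: Mapping[str, float]) -> float:
    """Expected write fraction for a session that mixes archetypes by the given weights."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    # Weighted average of each archetype's write ratio, normalized by total weight.
    return sum(WRITE_RATIO[name] * w for name, w in weights.items()) / total


if __name__ == "__main__":
    # A player who mostly explores but checks the ladder now and then.
    session = {"explorer": 0.7, "achiever": 0.2, "socializer": 0.1}
    print(round(blended_write_ratio(session), 3))
```

The weights don’t have to sum to 1 because the function normalizes them. That means you can feed it raw action counts pulled from a session log.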

The Verdict: Was the Reading Worth the Sleepless Nights?

After a week of intense simulation, I had a working theory that was different from the PDF’s primary conclusion. The PDF focused too much on what players did. I realized the failure wasn’t just about the type of request; it was about the frequency and dependency chaining of those requests, which the PDF only touched on briefly.
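Dependency chaining is easy to underestimate, so here’s a rough way to put numbers on it. Treat each backend operation as a node that calls other operations a fixed number of times, then count how many total calls a single player action expands into. The call graph, the operation names, and the one-queue-join-every-10-seconds rate below are hypothetical. Only the 50k concurrency figure comes from the launch-day spike.

```python
from functools import lru_cache

# Hypothetical call graph: operation -> [(downstream_operation, calls_per_invocation)].
# Must be acyclic, or total_calls() will recurse forever.
CALL_GRAPH = {
    "join_queue": [("load_profile", 1), ("find_opponents", 1)],
    "find_opponents": [("rating_lookup", 5), ("load_profile", 5)],
    "load_profile": [("inventory_read", 1)],
    "rating_lookup": [],
    "inventory_read": [],
}


@lru_cache(maxsize=None)
def total_calls(op: str) -> int:
    """Count this call plus every downstream call it transitively triggers."""
    return 1 + sum(n * total_calls(child) for child, n in CALL_GRAPH[op])


if __name__ == "__main__":
    players = 50_000                   # the concurrency level from the launch-day spike
    joins_per_second = players / 10    # assumption: one queue join per player every 10 s
    fan_out = total_calls("join_queue")
    print(fan_out, joins_per_second * fan_out)
```

With these made-up numbers, one queue join expands into 19 backend calls. So 5,000 joins per second at the edge become 95,000 calls per second behind it. The specific values don’t matter much; the point is the multiplier. A small change deep in the graph, like raising the `find_opponents` fan-out, multiplies through every layer above it.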

I used the PDF’s framework to understand player motive, but I had to discard its architectural recommendations. They were too generalized. I ended up implementing a dedicated caching layer for the ‘Explorer’ data (the part that caused the scattered reads), which decoupled it from the heavily contended ‘Achiever’ write database.
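The post doesn’t say which cache technology went in, so what follows is only a generic sketch of the idea. It puts a read-through LRU cache in front of the world-state store so that scattered, Explorer-style reads stop competing with ladder writes. `ReadThroughCache`, the loader callback, and the capacity are hypothetical. A production setup would more likely use a dedicated cache service than an in-process dictionary.

```python
from collections import OrderedDict
from typing import Any, Callable, Hashable


class ReadThroughCache:
    """Minimal LRU read-through cache; `loader` stands in for the world-state store."""

    def __init__(self, loader: Callable[[Hashable], Any], capacity: int = 1024) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._loader = loader
        self._capacity = capacity
        self._entries: OrderedDict = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> Any:
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self._entries[key]
        self.misses += 1
        value = self._loader(key)  # only misses reach the backing store
        self._entries[key] = value
        if len(self._entries) > self._capacity:
            self._entries.popitem(last=False)  # evict the least recently used entry
        return value

    def invalidate(self, key: Hashable) -> None:
        """Drop a cached entry after the underlying world state changes."""
        self._entries.pop(key, None)


if __name__ == "__main__":
    def load_world_state(region_id: Hashable) -> str:
        # Hypothetical slow read from the world-state database.
        return f"region-{region_id}"

    cache = ReadThroughCache(load_world_state, capacity=2)
    for region in (1, 2, 1, 3):  # the read of 3 evicts 2, the least recently used
        cache.get(region)
    print(cache.hits, cache.misses)
```

Ladder writes never pass through this object, and that separation is the decoupling. The trade-off is staleness. Anything that mutates world state has to call `invalidate`, or the entries need some expiry policy.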

So, is the ‘Tale of Four Gamers’ PDF worth reading? Yes, but not for the reasons you might think.

- It gives you a fantastic vocabulary to discuss player motive with the game designers.

- It forces you to think deeper than just “we need more RAM.” It makes you categorize load.

- But it won’t give you the answer. It’s a starting point. If you treat it like a technical blueprint, you’re going to fail. You have to adapt it to your own specific pile of player madness.

I eventually fixed the system. The CTO was happy. I didn’t get fired. But the biggest lesson I dragged out of that whole nightmare wasn’t in the PDF itself; it was learning that even the smartest-sounding theoretical documents are just stepping stones. You still have to do the hard work of tailoring the theory to the dirt and grime of your own production environment. Read it, dissect it, but trust your own data more.