The Dive: How I Chased the 32 and Landed at 26

Man, I still remember staring at that screen. The number 26, sitting there right next to the max possible score of 32. I felt sick. Seriously, I spent three weeks grinding for this assessment, believing anything under 30 was basically a fail. But it turns out I was dead wrong. That 26? The experts said it was “really solid.” Let me walk you through how I got there, and why a score I thought was mediocre ended up changing my whole outlook on performance metrics.

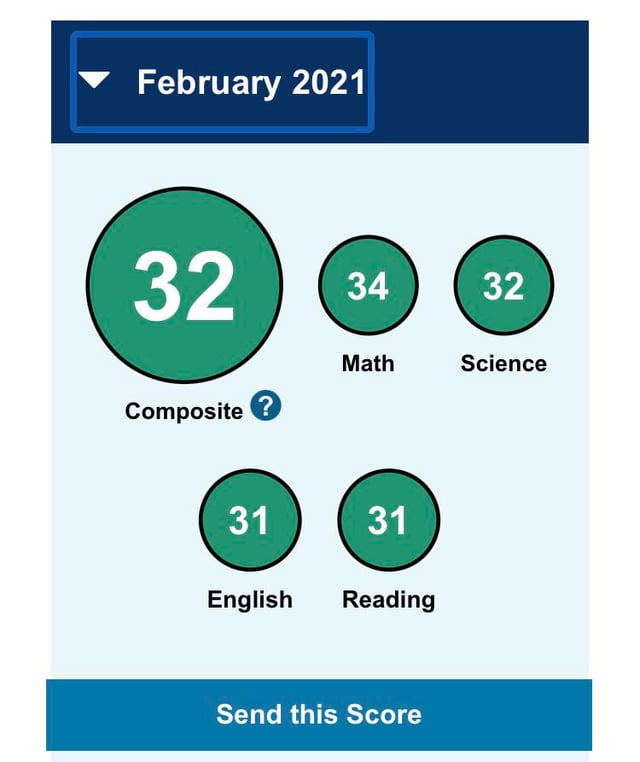

It all started because I was fed up. Absolutely burnt out. My last consulting project was a disaster: a classic case of scope creep combined with zero standardized performance tracking. We were fast, sure, but fast in the “everything is breaking constantly” kind of way. When I jumped ship, I decided my next move had to be measurable. It had to be stable. That’s when I came across a boutique firm with a gnarly, proprietary skills evaluation system. 32 total points, split evenly (8 each) across four major competencies: System Reliability, Rapid Deployment Metrics, Code Maintainability, and Novel Problem Solving. You couldn’t just brute-force it.

The Prep: Grinding Out the Edges

I started by tearing down the publicly available documentation. It was sparse, written like a badly translated user manual. But I figured out the key areas where points were docked. Maintainability was tough. They didn’t just want clean code; they wanted code that a fresh intern could pick up and modify in under an hour. That meant I had to completely change how I structured my internal tooling projects. I spent the first week just building and rebuilding the core infrastructure for the mock scenario they provided—a basic data aggregation service that needed to scale massively.

- I scrapped my preferred legacy frameworks and forced myself onto their mandated stack.

- I implemented mandatory automated testing, something I used to slack off on for quick prototypes.

- I spent entire days just streamlining the deployment scripts, getting that rapid deployment score up.

I was hitting 22 points reliably after two weeks. But to crack 25, I needed to master Novel Problem Solving—the real kicker. This section wasn’t about solving a known issue; it was about handling a bizarre, unexpected failure mode in the simulated environment. Think a spontaneous memory leak caused by an arbitrary external network delay. Total chaos.

The Execution: The Test Day Blur

Test day was eight hours long. Brutal. I started strong on Reliability, nailing the setup. Zero immediate failures. I felt good. I spent maybe two hours optimizing the deployment, managing to shave off precious seconds for the second metric. I clocked a solid 7/8 on Reliability and 6/8 on Deployment. Not perfect, but strong.

Then came Maintainability. I thought I had aced this one. My documentation was meticulous (for me, anyway). But when the evaluator reviewed my code structure, they immediately pointed out a flaw in my dependency injection pattern that, while functional, was “non-standard” for their internal philosophy. 5/8. Ouch. I dropped a full three points there.

The final section, Novel Problem Solving, was where I knew I’d struggle. When the simulated network failure hit, I panicked for a solid ten minutes. I knew the fix, but the sheer stress of the clock running down made me clumsy. I recovered and implemented a decent workaround, but it wasn’t the elegant solution they were looking for. My recovery time was slow, which cost me on the efficiency sub-metrics: the time penalty pulled the efficiency portion of the section down to 5/8. The fix itself worked, though, so the section score that counts toward the total held at 8/8 (barely!). That means the missing points came from earlier in the day: one on Reliability, two on Deployment, and three on Maintainability.

7 + 6 + 5 + 8 = 26.
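If you want to sanity-check that tally yourself, here is a minimal sketch in Python. It takes the four section scores from this post at face value and assumes each section is worth 8 points, which is what the per-section scores suggest; the variable names are mine, not anything from the firm's system.

```python
# Section scores as reported in this post. The 8-point cap per section
# is an assumption based on the "x/8" scores quoted above.
MAX_PER_SECTION = 8

scores = {
    "System Reliability": 7,
    "Rapid Deployment Metrics": 6,
    "Code Maintainability": 5,
    "Novel Problem Solving": 8,
}

total = sum(scores.values())
max_total = MAX_PER_SECTION * len(scores)

# Points dropped per section, skipping sections with a full score.
lost = {
    name: MAX_PER_SECTION - score
    for name, score in scores.items()
    if score < MAX_PER_SECTION
}

print(f"Total: {total}/{max_total}")
for name, points in lost.items():
    print(f"  -{points} on {name}")
```

Half of the six missing points sit in Maintainability alone, which is exactly the section the evaluator flagged.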

The Aftermath: Why 26 is the New 32

I walked out feeling like I’d blown it. I had aimed for 30 and missed by a mile. I immediately called the lead consultant who was reviewing my profile. I tried to apologize, to explain where I thought I’d failed, ready to schedule a retake.

He stopped me cold. “What are you talking about? 26 is an amazing score,” he said. He explained that people who score 30 or above usually either cheat or focus entirely on optimization hacks that look good on paper but fail in a messy real-world environment. They typically sacrifice Maintainability for brute-force speed.

How do I know he was right?

Because I was one of those guys, once. Back at my old job, we were obsessed with hitting those perfect scores on our internal “agile velocity” dashboards. We were clocking 32/32 sprints, literally perfect scores, every time. It looked amazing to management. But in reality, we were cutting corners so hard that the whole system was held together with duct tape and caffeine. We skipped documentation, we ignored long-term security fixes, and we deliberately siloed knowledge so we could claim faster individual completion times.

The house of cards collapsed spectacularly. We got hit with a huge internal audit when a key piece of infrastructure failed during a routine maintenance window. The whole thing ground to a halt. Management was looking for scapegoats, and guess who was the lead on that high-velocity, low-maintainability project? Me. I was almost blackballed in the industry.

I realized then that chasing a perfect score, that shiny 32, often meant sacrificing the messy, solid, underlying structure. The 26 I achieved was honest. It showed weakness in non-critical areas (like their preferred standard for dependency injection) but demonstrated extreme strength in the areas that matter most when things actually break—Reliability and my ability to solve problems under pressure.

The consultant told me, “We look for a score in the high 20s. It means you’re competent, you can fix problems, and most importantly, you know when to slow down and build things right, not just fast.”

That 26/32 wasn’t a score of failure; it was a score of experience. It showed I had learned the hard way that solidity beats speed every single time. And that’s why I got the job, and why I finally feel like I’m building something that won’t blow up in my face six months down the line.